Under what conditions can artificial intelligence (AI) be beneficial and useful for humanity without posing a threat to personal (or individual) freedoms and to democracy itself?

What are the real potential and limits of “smart machines”?

How far away are we from the creation of “super-smart” systems?

How far away are we from creating systems that could, at some point, slip out of human control?

Can Greece, realistically, hope to become an equal “player” in a coming AI economy?

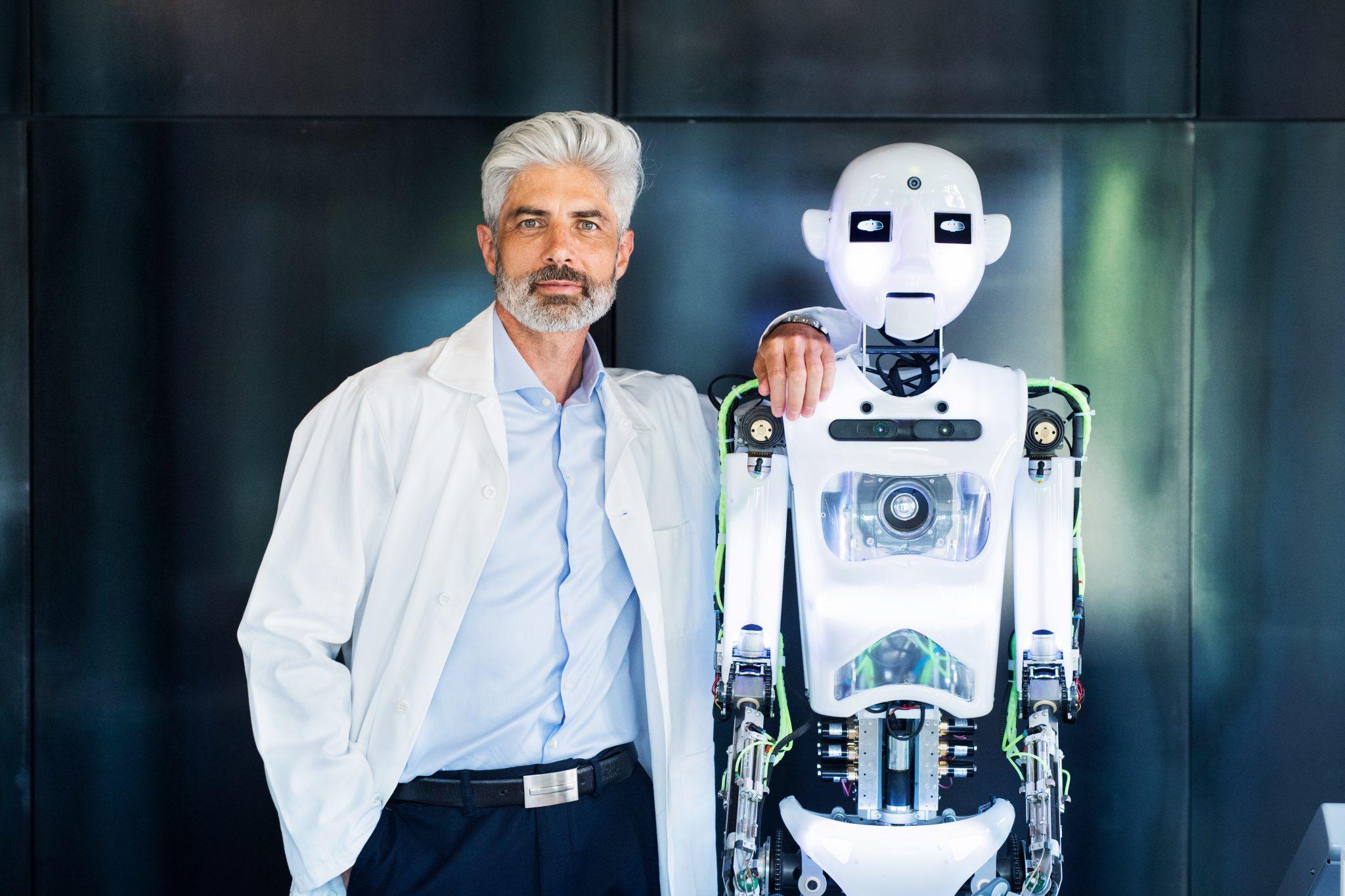

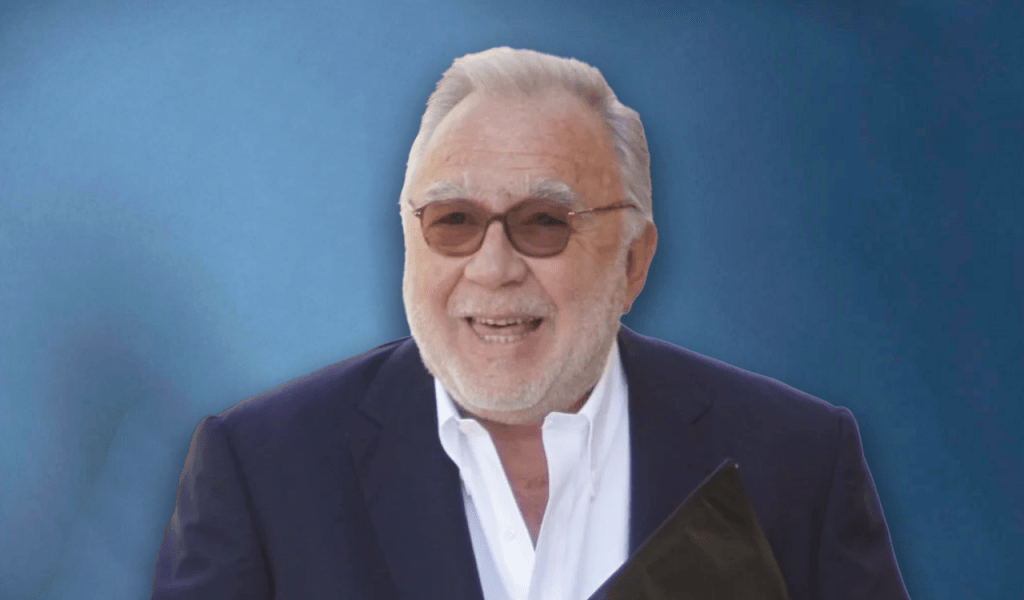

John Tasioulas is a professor of ethics and philosophy of law at Oxford, the director of the Institute for Ethics in AI, and the chair of the ELONTech Advisory Board.

The term ‘Machine Ethics’ was introduced a couple of decades ago with the aim of “ensuring that autonomous AI systems behave in an ethical fashion when interacting with human beings.” Do you believe that there can actually be autonomous AI systems in the future? And if so, in what sense?

‘Autonomy’ is a slippery notion with multiple senses, but I do think there are kinds of ‘autonomy’ that have already been achieved by AI systems, or soon will be achieved. There is a minimal sense of ‘autonomy’ exhibited by many AI systems, such as driverless cars or autonomous weapons systems, whereby they can make a decision without the need for, or perhaps even the possibility of, direct intervention by a human being. But there is also a richer sense of autonomy that AI systems have achieved. AI systems based on machine learning can make decisions which fulfil the goals that the designers have set for them, but where the decisions were not foreseen or predictable by those designers. Classic examples here are game-playing algorithms developed by DeepMind which made moves in games such as Breakout and Go that took the designers themselves by surprise. Indeed, Lee Sedol, the world champion of Go, who was defeated by AlphaGo, said the experience of playing against such a system convinced him that it was genuinely ‘creative’.

Immanuel Kant described autonomy as “the property of the will by which it is a law to itself (independently of any property of the objects of volition).” Could AI systems develop free will in the remote future?

It would be rash for a philosopher, or perhaps anyone else, to try to predict what AI developments might eventuate in the remote future. I take it that the Kantian notion of autonomy consists in the capacity to step back from any inclination or desire one might have, including those inculcated in us by our upbringing, and to reason about whether they ought to be followed. Unlike other beings known to us, we humans can stand back from our motivations, subject them to rational scrutiny, and freely decide how to act in light of that assessment. This capacity is the source of a special dignity that inheres in all human beings. Two things are important to mention in this connection. First, no AI system currently known to us exhibits anything remotely akin to this capacity for rational autonomy – such systems cannot reflect on the rational acceptability of the goals we have programmed into them. Second, even those leading AI scientists, such as Berkeley’s Stuart Russell, who have expressed alarm at the prospect of super-intelligent AI systems eluding our control and posing an existential threat to humanity, still operate with a narrow sense of intelligence, i.e. the capacity of an AI system to achieve the goals that have been programmed into it rather than to subject those goals to critical evaluation. So Kantian AIs look like a far-off dream. And I worry that focusing unduly on this highly speculative possibility distracts us from the ethical challenges that actually existing AI systems pose for us here and now.

AI systems allegedly offer the advantage of impartiality and freedom from bias in decision-making. Are there any pitfalls we should be concerned about, especially when it comes to the selection of personnel?

AI systems, if well-designed, can in principle help improve the quality of our decision-making. Clearly, there are many familiar human shortcomings which impair our decision-making – such as tiredness, self-interest, distracting emotional responses – to which AI systems are not susceptible. So AI systems have the potential to improve the effectiveness and efficiency of decision-making in many domains of human life, from hiring and medical diagnosis to transportation and the sentencing of criminals. But an AI algorithm’s effectiveness will depend on the quality of the data on which it is trained, and if this data itself is skewed in some way, then the algorithm will likely reproduce and even amplify those biases. A good example here is an algorithm that scrutinizes job applicants’ resumes on the basis of data regarding past hiring decisions that themselves reflect sexist hiring practices. And even if the data on which an algorithm is trained are cleansed of biases, it is possible that the algorithm is designed in such a way as to unfairly advantage some applicants as opposed to others, often without the designer being aware that this is what they are doing.

Over and above these concerns, we still have to confront the vital question of how much decision-making we ought to delegate to machines. After all, an AI system may generate a ‘correct decision’, but will it be able to explain and justify that decision in a way that satisfies those affected by it? Can it take responsibility for, and be held accountable for, its decision in the way that a human decision-maker can? If algorithmic decision-making is carried too far, the very idea of democracy may be imperiled, since democracy is about free and equal citizens engaging in self-government through collective decision-making, giving intelligible reasons to each other for their decisions. In short, we care not just about the quality of the decisions that are made, but also about how they are made. Because of this, we need to work towards a sound integration of AI systems into human decision-making, and not allow the former to supplant the latter wherever it yields adequate outputs.

AI is often discussed as raising concerns about integrity, security and autonomy, especially in relation to sensitive personal data – COVID-19 proximity detection and contact sensor devices are a good example of this. Are you concerned about AI safety issues?

Certainly. Given that machine learning algorithms need to be trained on massive amounts of digital data, there are serious implications for our privacy and other civil liberties. This is well known. But at the same time, we must avoid making a fetish of privacy or liberty. Privacy or liberty interests are one thing, but they are too often equated with rights to privacy or liberty. Rights differ from mere interests in that they always involve corresponding duties – and whether a duty exists has to be determined in part by reference to the costs of imposing it. Some may feel a strong liberty interest in not wearing a mask or in remaining unvaccinated against covid, but given the potential costs to others, it is highly doubtful that one has a right to be unmasked and unvaccinated during a pandemic. The emergence of new technologies such as AI can, over time, radically alter the balance of costs and benefits that shapes the content of our rights. For example, it is imperative that we explore the potential of big data allied to AI tools to drive important innovations in medical research. But in doing so, I would stress three things. The first is that we need assurance that the goals being pursued are worthy ones, and that the AI techniques adopted have a reasonable prospect of achieving them. The covid-19 pandemic is instructive here: sadly, many of the AI predictive tools that were developed at great expense offered little help in diagnosing and triaging covid-19 patients, and in some cases were potentially harmful. The second is that we need to ask whether personal data is genuinely necessary to secure advances, and not lazily assume that this is invariably so. The third is that it is vital to ensure robust forms of democratic oversight over the design, deployment, and operation of AI technologies that rely on vast quantities of data.
Only in a truly democratic society do the institutional safeguards exist to enable us responsibly to harness the full potential of AI to advance human well-being.

What can AI teach us about human consciousness? Is there any possibility that AI machines will ever understand things better than we do?

AI already confronts us with a new kind of intelligence that, in terms of its outputs, surpasses human intelligence in certain domains. AlphaFold, for example, uses deep learning to predict the structure of proteins. It has more than doubled the accuracy of previous methods, yielding valuable insights that hold out great promise in helping us cure diseases. Moreover, AI reaches these impressive results by methods – the discovery of highly complex statistical patterns that human intelligence cannot detect – that are not only radically different from human reasoning but also largely opaque, even to the designers of the AI systems themselves. Because of this, AI may bring about seismic shifts in our picture of reality, since for the first time in history we have a new and powerful non-human intelligence at work alongside us. But it will also pose a deep ethical challenge about what it means to be human in the age of AI. The emergence of AI threatens to dethrone humans from our status as the top dogs of cognition, while also threatening to alienate us from a world in which intellectual tasks are increasingly handed over to AI systems whose operations we cannot fully grasp. How can we derive the benefits of AI while retaining, and enhancing, what is of value in our humanity?

As a Greek, how do you see Greece becoming an equal player in the AI economy?

I think Greece has to mobilise its two great strengths: its incomparable cultural inheritance, and its wonderful people, who are the present-day bearers of that inheritance, especially the young people who always impress me so profoundly whenever I visit Greece. I believe that what I will call the ‘Platonic-Aristotelian’ approach to ethics has a lot to teach us today. Let me give two illustrations.

First, modern ethical thought tends to drive a wedge between what advances our personal well-being and what is morally required. Ethics is often seen as a constraint on the pursuit of human well-being. I think the Aristotelian idea of ‘eudaimonia’ is a powerful corrective to this view. It teaches us that (contrary to Kant and other modern thinkers) you cannot disconnect ideas of morality from ideas of personal flourishing. The two have to be integrated; hence Aristotle’s flourishing human being is someone who has moral virtues such as justice and courage. One lesson for AI ethics is that we shouldn’t think of ethics merely as a set of constraints or prohibitions on the development of AI, e.g. constraints about respecting privacy or other rights. We should also raise the ethical question of whether the goals AI is being developed to fulfil are genuinely worthy ones, goals that advance the interests of humankind. And it won’t suffice to answer that question by saying that the goals satisfy someone’s subjective preferences or that they generate more wealth.

The second illustration is the importance that the Platonic-Aristotelian tradition places on human judgment – the practical wisdom of the phronimos. This is a powerful counterweight to the formalist idea that our understanding of the good can be reduced to a system of rules that can be applied mechanically. The formalist picture gets a lot of support from the tremendous cultural authority of science in the modern age, but as Aristotle stressed, we should demand only the level of precision that is appropriate to each subject-matter, and ethics is a fundamentally different subject from science. The subject-matter of ethics is ‘indefinite’, as Aristotle says; hence his great discussion of equity in legal adjudication, which concerns the importance of knowing when to depart from a generally good law in order to achieve justice in the particular case. On this view, the ultimate locus of ethical wisdom is not a set of pre-defined rules (or algorithms), but a certain kind of character (ethos), sustained by an appropriate upbringing and social environment. If we think of ethics in this way, we are better placed to resist the excessive delegation of our decision-making to algorithms embedded in AI systems.

Greece is the geographic and cultural home of this profoundly rich humanistic ethic. I would love to see it do more to leverage this ‘soft power’, in order to help AI evolve in an ethically sound way but also, more generally, to help our species address the huge existential challenges it confronts.

What are your current goals as a professor and as the Director of the Institute for Ethics in AI?

The aim of the Institute we have established at Oxford is to enhance the quality of the ethical discussion around the profound challenges and opportunities created by the development of Artificial Intelligence. We aim to do so in a way that grounds ethical discussion in the long philosophical tradition of thought about human well-being and morality, reaching all the way back to the ancient Greeks. Oxford has long had one of the pre-eminent philosophy faculties in the world, and the Institute is deeply embedded in it. Moreover, we aim to enrich this philosophical approach through extensive dialogue and collaboration with colleagues in the humanities more broadly, as well as in the social sciences and the natural sciences. Key to the Institute’s activities has been fostering a strong intellectual relationship with members of Oxford’s world-leading department of computer science.

But beyond this purely academic activity, we are very much committed to fostering dialogue not only with key players in the realm of AI policy – such as governments, NGOs, corporations, international organisations, etc. – but also with ordinary citizens because, as Aristotle put it, sometimes the person who lives in a house knows more about it than the architect who designed it, and we are all increasingly living in the house of AI. In pursuing these goals, I very much hope we will be able to create fruitful links with Greek academics, entrepreneurs, and policy-makers. We recently had Ms Eva Kaili MEP visit us in Oxford, where she gave a brilliant talk about the EU’s proposed AI Regulation; I was delighted to become chair of the advisory board of ELONTech, which is doing great work exploring the significance of AI for values such as the rule of law; and we are in ongoing discussions with the Greek government about future collaboration.
